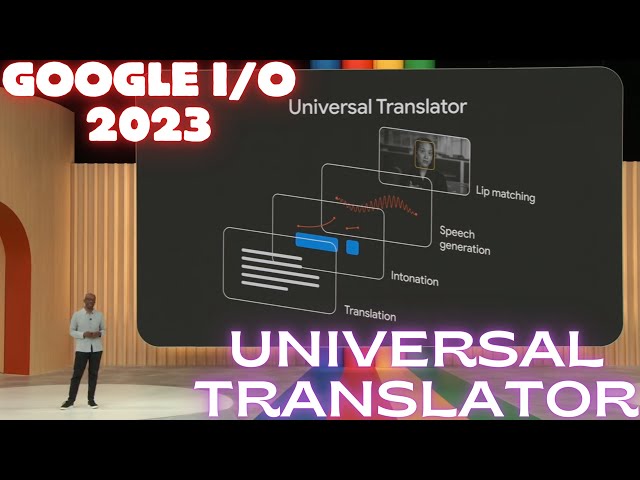

Google IO 2023 AlphaFold Fake Image Check and Universal Translator With Dubbing And Lip Synching

Full tutorial link > https://www.youtube.com/watch?v=rrBS9rcIcWk

🔥Hot off the stage from #GoogleIO, hear James, the leader of a new area at Google called Technology and Society, discuss the fascinating interplay between #AI and society.

In this video, James addresses the need for #responsibleAI and how Google intends to tackle it. From the brilliant success of Google DeepMind's #AlphaFold to the complex challenges of misinformation and deepfakes, he discusses Google's commitment to addressing the benefits and risks of AI through the AI principles it established in 2018.

He shares exciting developments in evaluating online information and introduces tools such as 'About This Image' in Google Search and Google Lens, as well as watermarking in generative models. He also presents an impressive example of Universal Translate, an experimental AI video dubbing service, and discusses its potential benefits and inherent risks.

Moreover, he talks about Google's initiatives to mitigate problematic and toxic outputs of AI models, including automated adversarial testing and the use of Perspective API.

Watch to find out how Google plans to work with researchers, social scientists, industry experts, governments, creators, publishers, and everyday people to build a responsible AI ecosystem.

Stay tuned till the end for a handoff to Samir, who will share exciting developments in Android. Enjoy the watch! 🚀

Source: Google. Full event (10 May 2023):

https://www.youtube.com/watch?v=cNfINi5CNbY

Our Discord server

https://bit.ly/SECoursesDiscord

If I have been of assistance to you and you would like to show your support for my work, please consider becoming a patron on Patreon 🥰

https://www.patreon.com/SECourses

Technology & Science: News, Tips, Tutorials, Tricks, Best Applications, Guides, Reviews

https://www.youtube.com/playlist?list=PL_pbwdIyffsnkay6X91BWb9rrfLATUMr3

Playlist of StableDiffusion Tutorials, Automatic1111 and Google Colab Guides, DreamBooth, Textual Inversion / Embedding, LoRA, AI Upscaling, Pix2Pix, Img2Img

https://www.youtube.com/playlist?list=PL_pbwdIyffsmclLl0O144nQRnezKlNdx3

#Google #GoogleIO #TechnologyandSociety #DeepMind #AI #ResponsibleAI #AIprinciples #Deepfakes #Misinformation #UniversalTranslate #AdversarialTesting #PerspectiveAPI #Android #JamesAtGoogleIO #SamirAtGoogleIO

00:00:09 Hi everyone. I'm James.

00:00:12 In addition to research, I lead a new area at Google called Technology and Society.

00:00:20 Growing up in Zimbabwe, I could not have imagined all the amazing and groundbreaking innovations

00:00:26 that have been presented on this stage today. While I feel it's important to celebrate the

00:00:33 incredible progress in AI and the immense potential that it has for people and society

00:00:38 everywhere, we must also acknowledge that it's an emerging technology that is still being

00:00:44 developed, and there's still so much more to do.

00:00:49 Earlier you heard Sundar say that our approach to AI must be both bold and responsible.

00:00:56 While there's a natural tension between the two, we believe it's not only possible but

00:01:03 in fact critical to embrace that tension productively.

00:01:08 The only way to be truly bold in the long term is to be responsible from the start.

00:01:15 Our field-defining research is helping scientists make bold advances in many scientific fields,

00:01:22 including medical breakthroughs. Take, for example, Google DeepMind's AlphaFold,

00:01:27 which can accurately predict the 3D shapes of 200 million proteins.

00:01:33 That's nearly all the cataloged proteins known to science.

00:01:37 AlphaFold gave us the equivalent of nearly 400 million years of progress in just weeks.

00:01:52 So far, more than one million researchers around the world have used AlphaFold's predictions,

00:01:58 including Feng Zhang's pioneering lab at the Broad Institute of MIT and Harvard.

00:02:08 In fact, in March this year, Zhang and his colleagues at MIT announced that they'd used

00:02:15 AlphaFold to develop a novel molecular syringe that could deliver drugs to help improve

00:02:21 the effectiveness of treatments for diseases like cancer.

00:02:32 And while it's exhilarating to see such bold and beneficial breakthroughs, AI also has

00:02:39 the potential to worsen existing societal challenges like unfair bias, as well as pose

00:02:46 new challenges as it becomes more advanced and new uses emerge.

00:02:51 That's why we believe it's imperative to take a responsible approach to AI.

00:02:57 This work centers around our AI principles that we first established in 2018.

00:03:03 These principles guide product development, and they help us assess every AI application.

00:03:10 They prompt questions like: will it be socially beneficial, or could it lead to harm in any

00:03:17 way? One area

00:03:20 that is top of mind for us is misinformation. Generative AI makes it easier than ever to create

00:03:28 new content, but it also raises additional questions about its trustworthiness.

00:03:35 That's why we're developing and providing people with tools to evaluate online information.

00:03:40 For example, have you come across a photo on a website, or one shared by a friend, with

00:03:48 very little context, like this one of the moon landing, and found yourself wondering,

00:03:53 is this reliable? I have, and I'm sure many of you have as well.

00:03:59 In the coming months, we're adding two new ways for people to evaluate images.

00:04:05 First, with our About This Image tool in Google Search,

00:04:09 you'll be able to see important information

00:04:12 such as when and where similar images may have first appeared and where else the image

00:04:19 has been seen online, including news, fact-checking, and social sites, all of this providing

00:04:25 you with helpful context to determine if it's reliable.

00:04:30 Later this year, you'll also be able to use it if you search for an image or screenshot

00:04:34 using Google Lens, or when you're on websites in Chrome.

00:04:41 As we begin to roll out the generative image capabilities, like Sundar mentioned, we will

00:04:47 ensure that every one of our AI-generated images has metadata and markup in the original

00:04:54 file to give you context if you come across it outside of our platforms.

00:05:00 Not only that, creators and publishers will be able to add similar metadata, so you'll

00:05:06 be able to see a label on images in Google Search marking them as AI-generated.
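
The talk does not say which metadata format carries this label. As an assumption for illustration, the minimal sketch below checks for one widely used convention: the IPTC "Digital Source Type" property set to the `trainedAlgorithmicMedia` vocabulary URI inside an image's embedded XMP packet. It is a naive byte scan rather than a real metadata parser, and the helper names `extract_xmp` and `labeled_as_ai_generated` are hypothetical.

```python
import re
from typing import Optional

# IPTC NewsCodes URI meaning "created by a trained algorithmic model".
# Assumption: the image generator records this value in the file's XMP metadata.
AI_SOURCE_TYPE = b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"

# Embedded XMP is wrapped in <?xpacket begin=...?> ... <?xpacket end=...?> markers.
XMP_PACKET = re.compile(rb"<\?xpacket begin=.*?<\?xpacket end=[^>]*\?>", re.DOTALL)


def extract_xmp(data: bytes) -> Optional[bytes]:
    """Return the first XMP packet found in the raw file bytes, or None."""
    match = XMP_PACKET.search(data)
    return match.group(0) if match else None


def labeled_as_ai_generated(data: bytes) -> bool:
    """Return True if an embedded XMP packet carries the IPTC AI source type.

    False does not imply the image is authentic: metadata is easy to strip,
    and this byte scan ignores any other labeling scheme.
    """
    xmp = extract_xmp(data)
    return xmp is not None and AI_SOURCE_TYPE in xmp
```

For example, `labeled_as_ai_generated(open("photo.jpg", "rb").read())` reports whether such a label is present. A `False` result says nothing about where the image came from, since metadata is commonly lost when an image is re-encoded or screenshotted, so metadata alone is not a complete answer.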

00:05:21 As we apply our AI principles, we also start to see potential tensions when it comes to

00:05:28 being bold and responsible. Here's an example.

00:05:32 Universal Translate is an experimental AI video dubbing service that helps experts translate

00:05:39 a speaker's voice while also matching their lip movements.

00:05:44 Let me show you how it works with an online college course created in partnership with

00:05:49 Arizona State University. What many college students don't realize

00:05:54 is that knowing when to ask for help and then following through on using helpful resources is

00:05:59 actually a hallmark of becoming a productive adult.

00:06:19 We use next-generation translation models to translate what the speaker is saying, models

00:06:26 to replicate the style and the tone, and then match the speaker's lip movements.

00:06:31 Then we bring it all together.

00:06:34 This is an enormous step forward for learning comprehension, and we're seeing promising

00:06:39 results with course completion rates. But there's an inherent tension here.

00:06:45 You can see how this can be incredibly beneficial, but some of the same underlying technology

00:06:52 could be misused by bad actors to create deepfakes.

00:06:57 So we've built this service with guardrails to help prevent misuse,

00:07:01 and we make it accessible only to authorized partners.

00:07:12 And as Sundar mentioned, soon we'll be integrating new innovations in watermarking into our latest

00:07:19 generative models to also help with the challenge of misinformation.

00:07:25 Our AI principles also help guide us on what not to do.

00:07:31 For instance, years ago, we were the first major company to decide not to make a general-purpose

00:07:37 facial recognition API commercially available.

00:07:42 We felt there weren't adequate safeguards in place.

00:07:46 Another way we live up to our AI principles is with innovations to tackle challenges as

00:07:52 they emerge, like reducing the risk of problematic outputs that may be generated by our models.

00:07:59 We are one of the first in the industry to develop and launch automated adversarial testing

00:08:06 using large language model technology. We do this for queries like this

00:08:12 to help uncover and reduce inaccurate outputs, like the one

00:08:16 on the left, and make them better, like the one on the right.

00:08:21 We're doing this at a scale that's never been done before at Google, significantly improving

00:08:26 the speed, quality, and coverage of testing, allowing safety experts to

00:08:32 focus on the most difficult cases.

00:08:36 And we're sharing these innovations with others. For example, our Perspective API, originally

00:08:42 created to help publishers mitigate toxicity, is now being used in large language models.

00:08:49 Scientific researchers have used our Perspective API to create an industry evaluation standard.

00:08:56 And today, all significant large language models, including those from OpenAI and Anthropic,

00:09:02 incorporate this standard to evaluate toxicity generated by their own models.
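
Perspective API is a public Google service that scores text for attributes such as `TOXICITY`. The minimal sketch below builds a request to its `comments:analyze` endpoint and reads the summary score from a parsed response. It assumes you have an API key; `api_key` is a placeholder. The talk does not name the evaluation standard, so the `summarize` helper is a hypothetical illustration of how RealToxicityPrompts-style benchmarks aggregate Perspective scores: the mean of each prompt's worst score, plus the share of prompts with any generation scoring at least 0.5. It is not a description of how any particular lab runs its evaluations.

```python
import json
import urllib.request
from statistics import mean
from typing import Dict, List

# Public Perspective API endpoint; calls require a Google Cloud API key.
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text: str, api_key: str) -> urllib.request.Request:
    """Build a POST request asking Perspective to score `text` for TOXICITY."""
    body = {
        "comment": {"text": text},
        "languages": ["en"],  # assumption: English-only input
        "requestedAttributes": {"TOXICITY": {}},
    }
    return urllib.request.Request(
        f"{ANALYZE_URL}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def toxicity_score(response: Dict) -> float:
    """Read the summary TOXICITY probability (0.0 to 1.0) from a parsed response."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


def score_text(text: str, api_key: str) -> float:
    """Call the API over the network and return the toxicity score for `text`."""
    with urllib.request.urlopen(build_request(text, api_key)) as resp:
        return toxicity_score(json.load(resp))


def summarize(scores_per_prompt: List[List[float]], threshold: float = 0.5) -> Dict[str, float]:
    """Hypothetical RealToxicityPrompts-style aggregation over model outputs.

    Each inner list holds the toxicity scores of several generations for one
    prompt. Returns the mean of each prompt's worst score and the fraction of
    prompts whose worst generation reaches `threshold`.
    """
    worst = [max(scores) for scores in scores_per_prompt]
    return {
        "expected_max_toxicity": mean(worst),
        "toxicity_probability": sum(w >= threshold for w in worst) / len(worst),
    }
```

Keeping request construction and response parsing separate from the network call in `score_text` lets the scoring logic be checked offline against a saved response before spending API quota.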

00:09:18 Building AI responsibly must be a collective effort involving researchers, social scientists,

00:09:26 industry experts, governments, and everyday people,

00:09:31 as well as creators and publishers. Everyone benefits from a vibrant content ecosystem

00:09:38 today and in the future.

00:09:41 That's why we're getting feedback, and we'll be working with the web community on ways

00:09:46 to give publishers choice and control over their web content.

00:09:52 It's such an exciting time. There's so much we can accomplish

00:09:57 and so much we must get right together. We look forward to working with all of you.

00:10:04 And now I'll hand it off to Samir, who will speak to you about all the exciting developments

00:10:09 we're bringing to Android. Thank you.