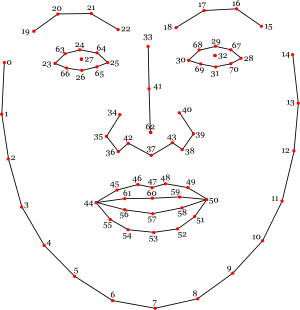

clmtrackr is a JavaScript library for fitting facial models to faces in videos or images. It currently implements constrained local models fitted by regularized landmark mean-shift, as described in Jason M. Saragih's paper. clmtrackr tracks a face and outputs the coordinate positions of the face model as an array, following the numbering of the model below:

The library provides some generic face models that were trained on the MUCT database and some additional self-annotated images. Check out clmtools for building your own models.
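As a rough illustration of working with the position array described above, the sketch below computes a bounding box around the tracked points. It assumes the array holds `[x, y]` pairs, one per model point; `boundingBox` and the sample data are hypothetical and not part of clmtrackr.

```javascript
// Hypothetical helper (not part of clmtrackr): compute the axis-aligned
// bounding box of a set of tracked points.
// Assumption: `positions` is an array of [x, y] pairs, one per model point.
function boundingBox(positions) {
  // Guard against a missing or empty result (e.g. no face fitted yet).
  if (!Array.isArray(positions) || positions.length === 0) {
    return null;
  }
  let minX = Infinity;
  let minY = Infinity;
  let maxX = -Infinity;
  let maxY = -Infinity;
  for (const [x, y] of positions) {
    if (x < minX) minX = x;
    if (y < minY) minY = y;
    if (x > maxX) maxX = x;
    if (y > maxY) maxY = y;
  }
  return { x: minX, y: minY, width: maxX - minX, height: maxY - minY };
}

// Made-up sample points standing in for real tracker output.
const samplePositions = [[10, 20], [30, 5], [25, 40]];
console.log(boundingBox(samplePositions));
```

In a real page, the array would come from the tracker rather than from sample data, and the resulting box could be used to crop or highlight the face region.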

For tracking in video, it is recommended to use a browser with WebGL support, though the library should work on any modern browser.
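A page can check for WebGL before starting video tracking. The sketch below uses the standard `canvas.getContext` call; `hasWebGL` is a hypothetical helper, not part of clmtrackr, and it takes the document as a parameter so it can also be exercised outside a browser.

```javascript
// Hypothetical helper (not part of clmtrackr): report whether a WebGL
// rendering context can be created from the given document object.
function hasWebGL(doc) {
  try {
    const canvas = doc.createElement('canvas');
    // Older browsers exposed WebGL under the 'experimental-webgl' name.
    const gl = canvas.getContext('webgl') ||
               canvas.getContext('experimental-webgl');
    return Boolean(gl);
  } catch (e) {
    // Treat any failure to create a canvas or context as "no WebGL".
    return false;
  }
}

// Browser usage: only runs where a global `document` exists.
if (typeof document !== 'undefined') {
  console.log(hasWebGL(document) ? 'WebGL available' : 'WebGL not available');
}
```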

For more information about Constrained Local Models, take a look at Xiaoguang Yan's excellent tutorial, which was of great help in implementing this library.

clmtrackr is distributed under the MIT License.