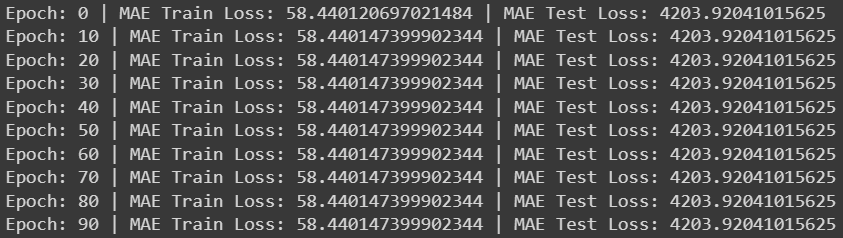

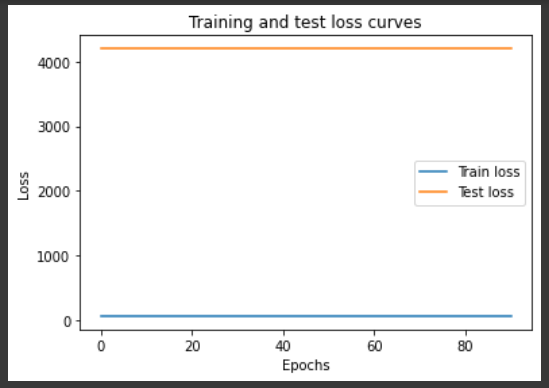

MAE train & MAE test loss functions give same values over multiple epochs, effectively no training being done. #311


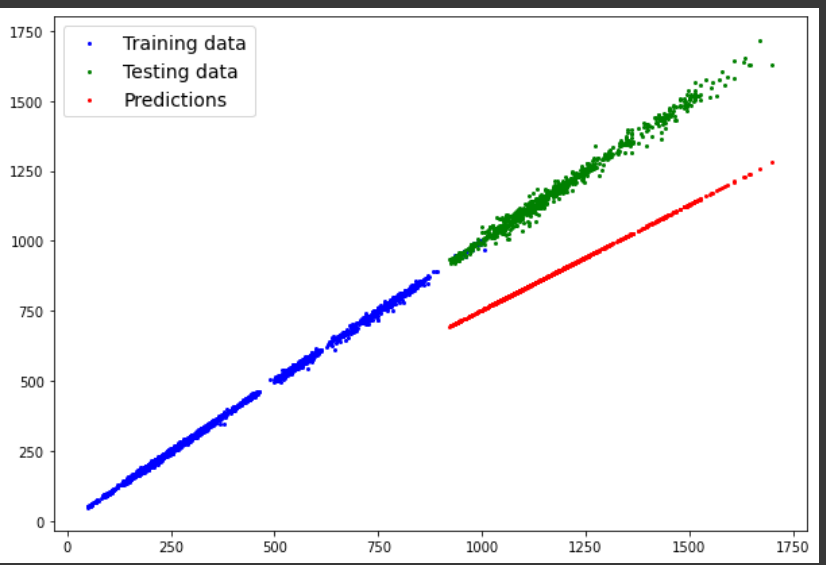

Most of the code is from the pytorch_workflow.ipynb file; I changed the dataset to Google stock data I found online. The code I have written is in the zip file. If you run through the cells and get to the loss calculation, the test and training losses are:

Even if I increase the number of epochs, the values do not change; they stay the same instead of decreasing. In graph form, it looks like this:

Does anyone have an idea of why this happens?


Replies: 1 comment 2 replies


The neural network architecture in "Workflow.ipynb" does not allow the model to learn complex patterns in stock data. In the workflow notebook, the architecture consists only of linear layers and has no activation functions between the layers to account for non-linearity. Hence, increasing the number of epochs is only one of the ways to improve the model's performance. There are many other ways to teach the model different patterns, and in a later workbook Daniel specifies what else could be changed in the architecture to make the model better. Hope this answers your question.
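To make the point about missing activation functions concrete, here is a minimal sketch. The layer sizes (1 input, 8 hidden units, 1 output) are assumptions chosen for illustration and are not taken from the notebook or the attached zip file. It checks that two linear layers stacked without an activation behave exactly like a single linear layer, then shows one possible way to put a non-linearity (`nn.ReLU`) between them.

```python
import torch
from torch import nn

torch.manual_seed(42)

# Two stacked linear layers with no activation in between.
# The sizes (1 -> 8 -> 1) are hypothetical, picked only for this sketch.
stacked = nn.Sequential(nn.Linear(1, 8), nn.Linear(8, 1))

# Collapse them into one equivalent linear layer:
#   W = W2 @ W1,  b = W2 @ b1 + b2
first, second = stacked[0], stacked[1]
collapsed = nn.Linear(1, 1)
with torch.no_grad():
    collapsed.weight.copy_(second.weight @ first.weight)
    collapsed.bias.copy_(second.weight @ first.bias + second.bias)

# Both models give the same predictions on the same inputs.
x = torch.linspace(-1.0, 1.0, steps=5).unsqueeze(dim=1)
with torch.inference_mode():
    same_output = torch.allclose(stacked(x), collapsed(x), atol=1e-6)
print(f"Stacked linear layers equal one linear layer: {same_output}")

# One possible alternative: a non-linear activation between the layers,
# so the model is no longer limited to straight-line relationships.
nonlinear = nn.Sequential(nn.Linear(1, 8), nn.ReLU(), nn.Linear(8, 1))
```

This only illustrates why extra linear layers alone add no expressive power; it is not a claim that swapping in `nonlinear` will by itself fix the flat loss curves in the original question.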

