pytorch-lightning output embeddings completely different from vanilla PyTorch #9873


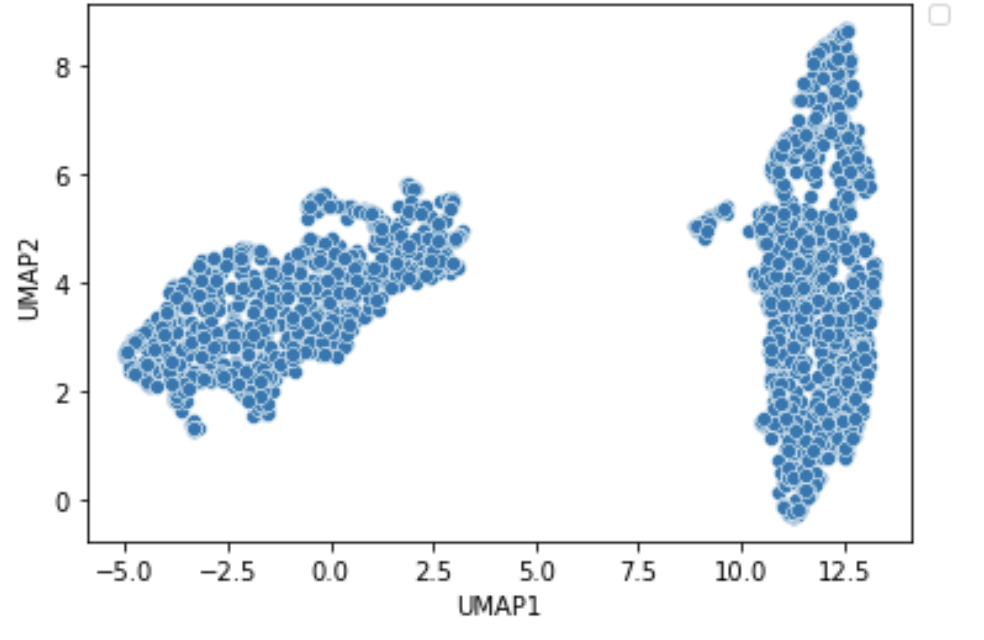

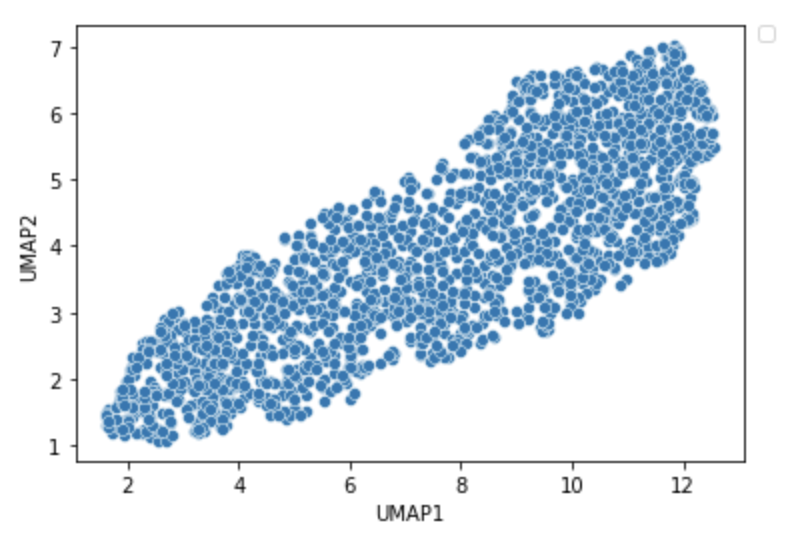

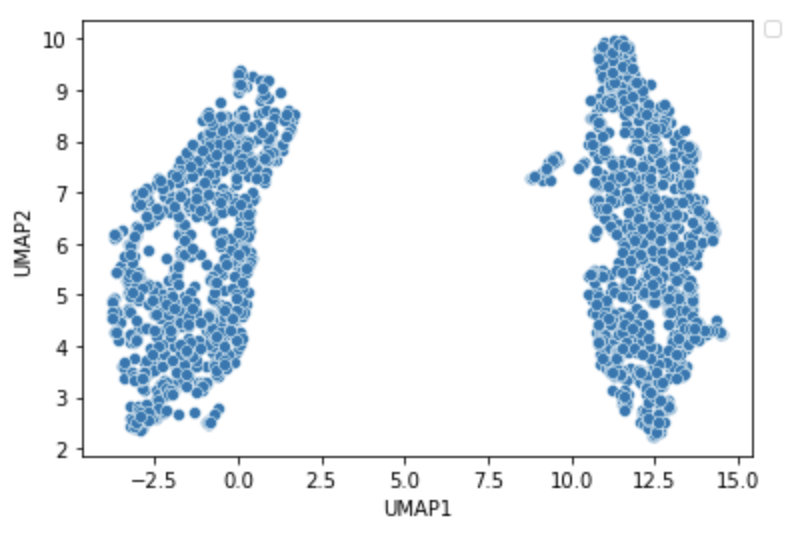

Hey pytorch-lightning team,

I am new to pytorch-lightning and am trying to build an autoencoder (AE) architecture with it, since I have seen its advantages over a plain PyTorch implementation when running complex models. However, with this vanilla AE model I observe a significant difference between the embeddings produced by pytorch-lightning and by PyTorch, whereas I expected pytorch-lightning to give exactly the same UMAP embedding as PyTorch. Here is an example: the PyTorch implementation and the UMAP plot of its embedding, followed by the pytorch-lightning implementation of the same AE model and the UMAP plot of its embedding.

Since I have experience with this data type and its data-generating process, I am confident that the PyTorch output is the correct one.

Any hints or explanations would be highly appreciated! Thank you in advance for your help.

Best wishes,
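Separately from how the trained weights are obtained, an exact match between two independently built models also requires identical random initialization. A minimal sketch, assuming a small hypothetical `nn.Sequential` encoder (the layer sizes are illustrative, not taken from the screenshots): two models built after seeding PyTorch's global RNG with the same value start from identical weights, while a different seed gives different weights. In a Lightning script, `seed_everything` serves the same purpose; this sketch uses `torch.manual_seed` only. Data shuffling and UMAP's own randomness can still change the final plot.

```python
import torch
from torch import nn


def make_encoder(seed: int) -> nn.Module:
    """Build a small (hypothetical) encoder after seeding PyTorch's global RNG."""
    torch.manual_seed(seed)
    return nn.Sequential(nn.Linear(20, 8), nn.ReLU(), nn.Linear(8, 2))


def same_weights(a: nn.Module, b: nn.Module) -> bool:
    """True if every parameter tensor of `a` equals the matching one in `b`."""
    return all(torch.equal(p, q) for p, q in zip(a.parameters(), b.parameters()))


enc_a = make_encoder(seed=42)
enc_b = make_encoder(seed=42)
enc_c = make_encoder(seed=7)

# Same seed: identical initial weights.
print(same_weights(enc_a, enc_b))
# Different seed: different initial weights.
print(same_weights(enc_a, enc_c))
```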


---

Hi,

```python
# A freshly constructed model: its weights are randomly initialized, not trained
model = Autoencoder()
encodings = get_encodings(model, data_loader)
```

Here, I think you are reinitializing the model and using the one with random weights. You need to load a checkpoint here:

```python
# Restore the trained weights saved during training (ckpt_path points to the checkpoint file)
model = Autoencoder.load_from_checkpoint(ckpt_path)
encodings = get_encodings(model, data_loader)
```
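The effect described in the answer can be reproduced with plain PyTorch. The sketch below is hypothetical: the tiny `Autoencoder` architecture, the `get_encodings` helper, and the random data are stand-ins for the code in the screenshots, and saving/loading a `state_dict` plays the role that the Lightning checkpoint and `load_from_checkpoint` play in the thread. A freshly constructed model produces different encodings from the trained one, while a model restored from the saved weights reproduces them exactly.

```python
import os
import tempfile

import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


class Autoencoder(nn.Module):
    """Hypothetical minimal autoencoder standing in for the model in the question."""

    def __init__(self, in_dim: int = 20, latent_dim: int = 2):
        super().__init__()
        self.encoder = nn.Sequential(nn.Linear(in_dim, 8), nn.ReLU(), nn.Linear(8, latent_dim))
        self.decoder = nn.Sequential(nn.Linear(latent_dim, 8), nn.ReLU(), nn.Linear(8, in_dim))

    def forward(self, x):
        return self.decoder(self.encoder(x))


@torch.no_grad()
def get_encodings(model: nn.Module, loader: DataLoader) -> torch.Tensor:
    """Hypothetical helper: run only the encoder over the data, in eval mode."""
    model.eval()
    return torch.cat([model.encoder(x) for (x,) in loader])


torch.manual_seed(0)
data = torch.randn(256, 20)
loader = DataLoader(TensorDataset(data), batch_size=64, shuffle=False)

# Train briefly so the weights move away from their random initialization.
trained = Autoencoder()
opt = torch.optim.Adam(trained.parameters(), lr=1e-2)
trained.train()
for _ in range(20):
    for (x,) in loader:
        opt.zero_grad()
        loss = nn.functional.mse_loss(trained(x), x)
        loss.backward()
        opt.step()

# Save the trained weights (stand-in for a Lightning checkpoint file).
ckpt_path = os.path.join(tempfile.mkdtemp(), "autoencoder.pt")
torch.save(trained.state_dict(), ckpt_path)
trained_enc = get_encodings(trained, loader)

# The bug from the question: a new instance has fresh random weights.
fresh_enc = get_encodings(Autoencoder(), loader)

# The fix: restore the trained weights before computing encodings.
restored = Autoencoder()
restored.load_state_dict(torch.load(ckpt_path))
restored_enc = get_encodings(restored, loader)

print("fresh == trained:   ", torch.allclose(fresh_enc, trained_enc))
print("restored == trained:", torch.allclose(restored_enc, trained_enc))
```

If the encodings are computed in the same script right after training, reusing the trained model object directly (instead of constructing a new one) avoids the problem as well.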